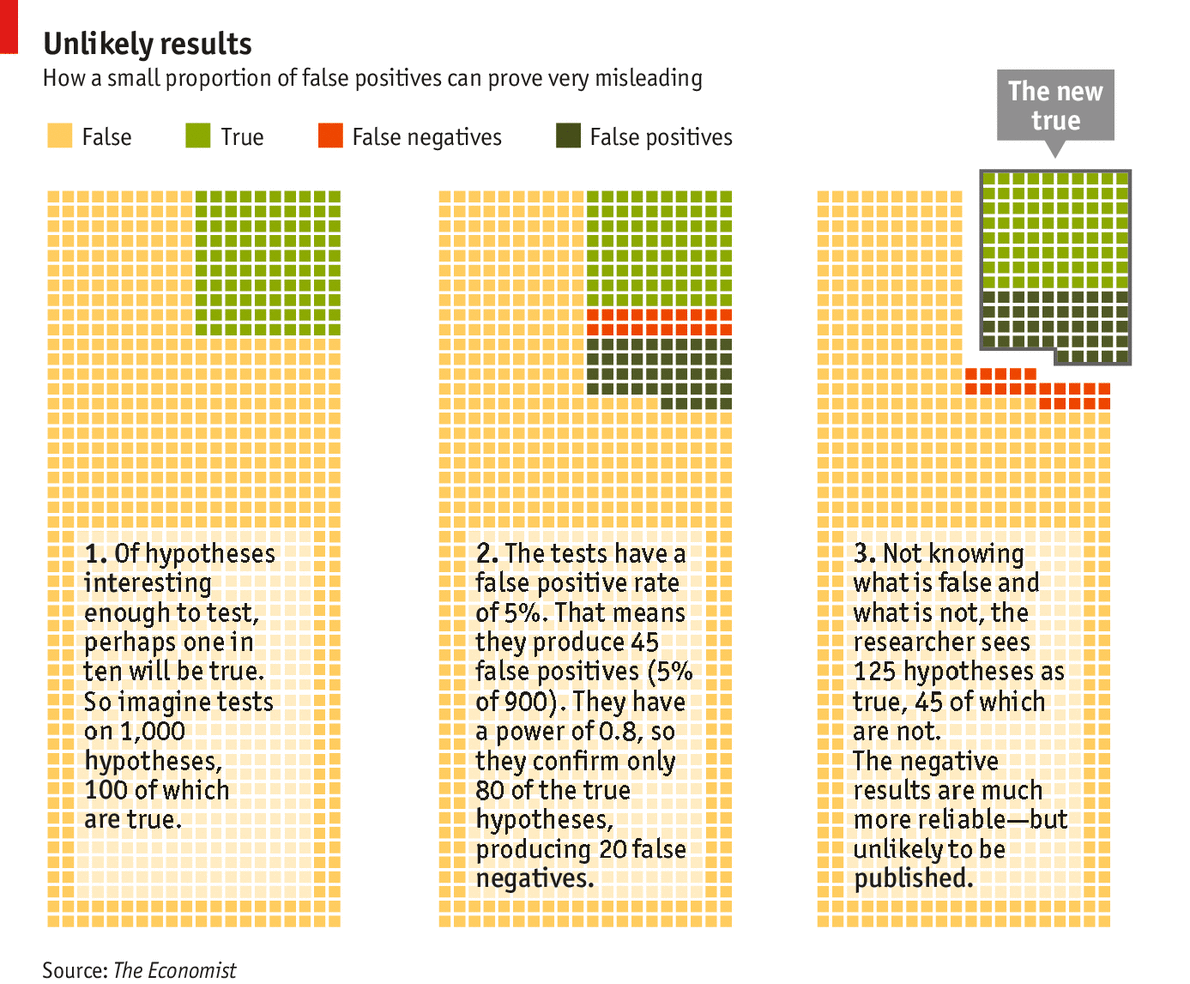

There is an interesting article in The Economist dealing with the validity of articles published in scientific and technological journals. I recommend reading it in full. I quote one small portion (which assumes that one in 20 incorrect hypotheses will be accepted):

(C)onsider 1,000 hypotheses being tested of which just 100 are true (see chart). Studies with a power of 0.8 will find 80 of them, missing 20 because of false negatives. Of the 900 hypotheses that are wrong, 5%—that is, 45 of them—will look right because of type I errors. Add the false positives to the 80 true positives and you have 125 positive results, fully a third of which are specious. If you dropped the statistical power from 0.8 to 0.4, which would seem realistic for many fields, you would still have 45 false positives but only 40 true positives. More than half your positive results would be wrong.
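The arithmetic in the quoted passage is easy to check. Below is a minimal sketch that uses only the figures from the quote: 1,000 hypotheses, 100 of them true, a 5% type I error rate, and power of 0.8 or 0.4. The function name `positive_results` is hypothetical, chosen for illustration.

```python
def positive_results(n_hypotheses, n_true, power, alpha):
    """Split positive results into true and false positives, as in the quoted example."""
    n_false = n_hypotheses - n_true
    true_pos = power * n_true      # real effects the studies manage to detect
    false_pos = alpha * n_false    # wrong hypotheses that look right (type I errors)
    total = true_pos + false_pos
    return true_pos, false_pos, false_pos / total  # last value: share of positives that are wrong

for power in (0.8, 0.4):
    tp, fp, frac_wrong = positive_results(1000, 100, power, 0.05)
    print(f"power={power}: {tp:.0f} true, {fp:.0f} false, {frac_wrong:.0%} of positives wrong")
# power=0.8: 80 true, 45 false, 36% of positives wrong
# power=0.4: 40 true, 45 false, 53% of positives wrong
```

The 36% is the "fully a third" of the quote, and the 53% is the "more than half".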

The article also states:

Fraud is very likely second to incompetence in generating erroneous results, though it is hard to tell for certain. Dr Fanelli has looked at 21 different surveys of academics (mostly in the biomedical sciences but also in civil engineering, chemistry and economics) carried out between 1987 and 2008. Only 2% of respondents admitted falsifying or fabricating data, but 28% of respondents claimed to know of colleagues who engaged in questionable research practices.

Let's think about that for a moment. Assume that every author knows 14 others well enough to know whether or not each had ever falsified results; in that case, if 2% of authors do in fact falsify, wouldn't something like 28% of respondents know someone who had falsified?
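Multiplying 14 acquaintances by 2% gives 28%, which is the simple estimate behind that question. The sketch below compares it with a slightly more careful version. It assumes, purely for illustration, that each of the 14 colleagues falsifies independently with probability 0.02. The function name `share_knowing_a_falsifier` is hypothetical.

```python
def share_knowing_a_falsifier(acquaintances, falsify_rate):
    """Chance that at least one of `acquaintances` colleagues has falsified,
    assuming (hypothetically) each falsifies independently with probability `falsify_rate`."""
    return 1 - (1 - falsify_rate) ** acquaintances

n, p = 14, 0.02
print(f"linear estimate: {n * p:.0%}")                                 # 28%
print(f"independent model: {share_knowing_a_falsifier(n, p):.0%}")     # 25%
```

Under that assumption, the figure comes to about 25% rather than 28%. That is still "something like" the survey result.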

The quote says "know of", not "know". Cases of detected falsification become quite famous, so many scientists would "know of" a scientist in the same or a closely related field who had been revealed to have falsified data, even if they did not know that scientist personally.

And of course, it is likely that some of the people believed to have falsified data will not have done so.

In an article suggesting you not believe everything you read in a scientific journal, The Economist's writers and editors seem to have committed the same kind of inexactitude of which they warn.

Watch the video explaining the idea from The Economist.

Postscript: It occurs to me that in the example, before the experiment the probability of the hypothesis being true seems to be 0.10 (100 of 1,000); after a positive result it seems to be 0.64 (80 of the 125 positive results are true). Thus the a posteriori probability is much higher given the positive outcome of the experiment. I would say that kind of change merits publication, since it would encourage others to study the hypothesis further and add information that would help confirm or challenge it. That is the way science is supposed to be done.
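The same update can be written with Bayes' theorem. Here the power (0.8) is the chance of a positive result when the hypothesis is true. The 5% type I error rate is the chance of a positive result when the hypothesis is false. The prior is 0.1.

```latex
P(\text{true} \mid +)
  = \frac{P(+ \mid \text{true})\,P(\text{true})}
         {P(+ \mid \text{true})\,P(\text{true}) + P(+ \mid \text{false})\,P(\text{false})}
  = \frac{0.8 \times 0.1}{0.8 \times 0.1 + 0.05 \times 0.9}
  = \frac{0.08}{0.125}
  = 0.64
```

With the lower power of 0.4, the same formula gives 0.04 / 0.085 ≈ 0.47. That is still well above the prior of 0.1.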

2 comments:

Your postscript is brilliant. This is the way to think of any "scientific" results: the results make the hypothesis more likely.

Thank you for that very nice comment!
