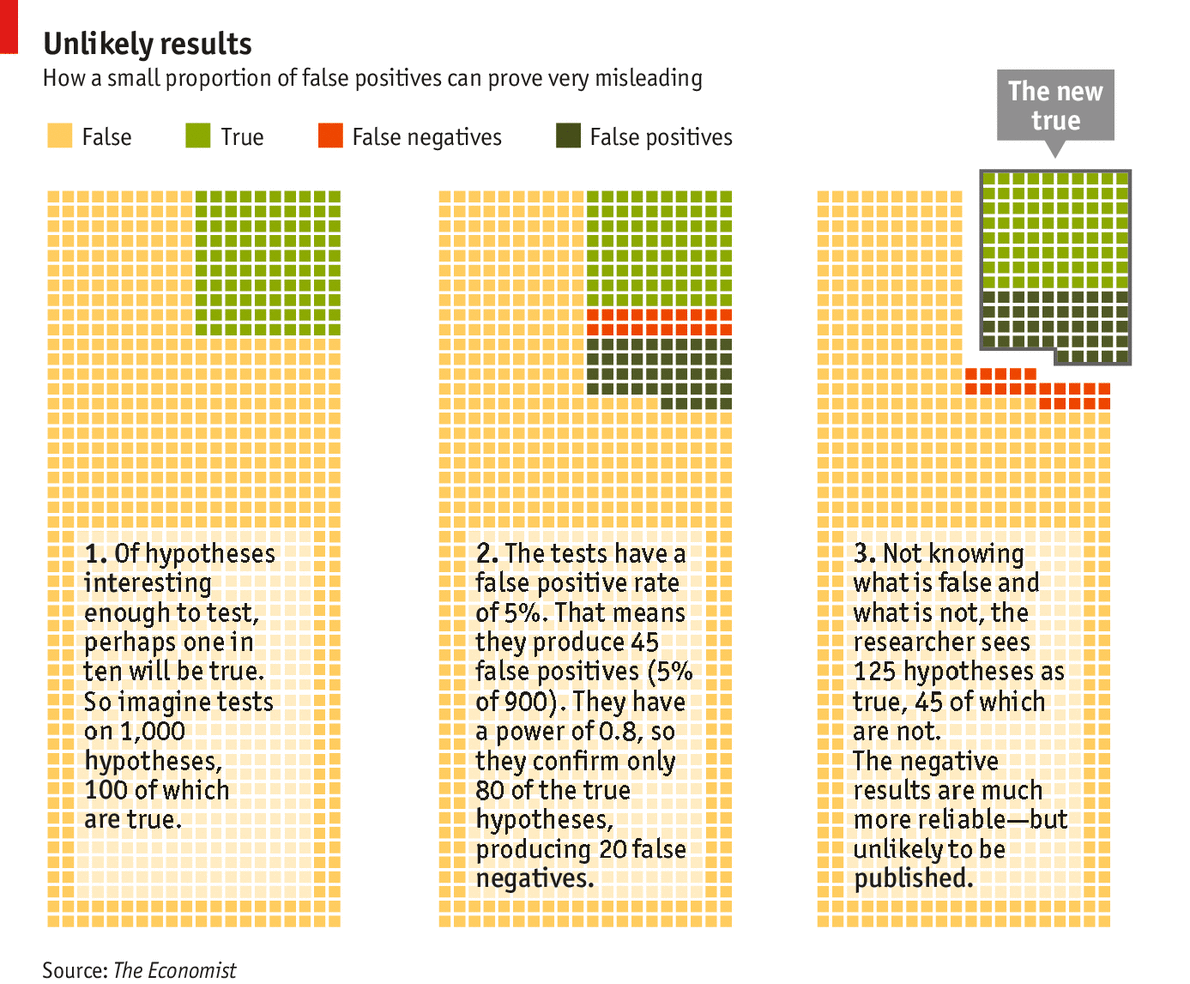

I recently commented on an article from The Economist. I quoted one small portion of the article, which assumes that one in 20 incorrect hypotheses will be accepted (a 5% false-positive rate):

(C)onsider 1,000 hypotheses being tested of which just 100 are true (see chart). Studies with a power of 0.8 will find 80 of them, missing 20 because of false negatives. Of the 900 hypotheses that are wrong, 5%—that is, 45 of them—will look right because of type I errors. Add the false positives to the 80 true positives and you have 125 positive results, fully a third of which are specious. If you dropped the statistical power from 0.8 to 0.4, which would seem realistic for many fields, you would still have 45 false positives but only 40 true positives. More than half your positive results would be wrong.

I noted that in the example, before the experiment the probability of a hypothesis being true is 0.10 (100 of the 1,000 hypotheses); after a positive result it is 0.64 (80 true positives out of 125 positive results). Thus the a posteriori probability is much higher given the positive outcome of the experiment.

On the other hand, consider experiments with negative results. In the example, there would be 20 false negatives and 855 true negatives. The a posteriori probability of the hypothesis being true given a negative result would be 20/875, or about 0.02.
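The arithmetic above can be checked with a short sketch. The function name and parameters here are my own, chosen for illustration; the counts, the power of 0.8 (or 0.4) and the 5% type I error rate come from the quoted example.

```python
def posterior_given_outcome(n_hypotheses, n_true, power, alpha):
    """Return (P(true | positive), P(true | negative)) from expected counts.

    power is the chance a true hypothesis tests positive;
    alpha is the chance a false hypothesis tests positive (type I error).
    """
    n_false = n_hypotheses - n_true
    true_pos = power * n_true        # true hypotheses that test positive
    false_neg = n_true - true_pos    # true hypotheses that are missed
    false_pos = alpha * n_false      # false hypotheses that look right
    true_neg = n_false - false_pos   # false hypotheses correctly rejected
    p_true_if_pos = true_pos / (true_pos + false_pos)
    p_true_if_neg = false_neg / (false_neg + true_neg)
    return p_true_if_pos, p_true_if_neg


# The Economist's example: 1,000 hypotheses, 100 of them true.
p_pos, p_neg = posterior_given_outcome(1000, 100, power=0.8, alpha=0.05)
print(f"power 0.8: positive {p_pos:.2f}, negative {p_neg:.3f}")

# The lower-power case from the same quotation.
p_pos_low, p_neg_low = posterior_given_outcome(1000, 100, power=0.4, alpha=0.05)
print(f"power 0.4: positive {p_pos_low:.2f}, negative {p_neg_low:.3f}")
```

With a power of 0.8 this gives 80/125 = 0.64 after a positive result and 20/875, about 0.023, after a negative one. With a power of 0.4 the figure after a positive result falls to 40/85, about 0.47, which matches the quotation's remark that more than half of the positive results would be wrong.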

A positive outcome from an experiment with these characteristics makes the hypothesis tested much more likely to be true; a negative outcome from the same kind of experiment makes a hypothesis that already seemed unlikely seem still more unlikely.

Science advances by the accumulation of evidence, and hypotheses are not supposed to be widely accepted until supported by a number of different experiments. Positive results from one experiment do not mean that a hypothesis is necessarily true, but they suggest that the hypothesis is especially worthy of more investigation. Negative results from one experiment mean that the hypothesis is less likely to be supported by further investigation.
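To illustrate how evidence might accumulate, here is a minimal sketch that applies Bayes' rule once per experiment. It rests on assumptions that are mine rather than the article's: that successive experiments are independent, and that each has the same power (0.8) and type I error rate (0.05) as in the quoted example. The function name `update` is hypothetical.

```python
def update(prior, positive, power=0.8, alpha=0.05):
    """Apply Bayes' rule for the result of one experiment.

    Assumes (for illustration only) that experiments are independent
    and share the same power and type I error rate.
    """
    if positive:
        like_true, like_false = power, alpha
    else:
        like_true, like_false = 1 - power, 1 - alpha
    numerator = like_true * prior
    return numerator / (numerator + like_false * (1 - prior))


# Start from the example's prior of 0.10 and observe two positive results.
p = 0.10
history = []
for result in [True, True]:
    p = update(p, result)
    history.append(p)
print([round(x, 3) for x in history])

# A single negative result starting from the same prior.
p_after_negative = update(0.10, positive=False)
print(round(p_after_negative, 3))
```

Under these assumptions the first positive result raises the probability from 0.10 to 0.64, and a second independent positive result raises it to about 0.97, while a single negative result lowers it to about 0.02. Real replications are rarely fully independent, so the numbers should be read as a rough guide only.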

Surely both positive and negative results should be available to the scientific community in our Internet age, but I see a rationale for publishing the positive results more widely.

Scientific journals may well be doing the right thing by focusing more on positive results. Publishing a positive result might draw scientists to study the hypothesis who would not otherwise have done so.

On the other hand, it would be good if a scientist interested in testing a specific hypothesis could interrogate a database to find how many previous experiments had come up with positive and how many had come up with negative results.
